Understanding Responsible AI – Principles, impacts, and applications in a Ugandan Context

In June 2024, I had the opportunity to facilitate a two-hour lecture on Understanding Responsible AI: Principles, Impacts, and Applications in Uganda. A large number of people attended, both online and in person, at the Uganda Institute of Information and Communication Technology (UICT). Participants included representatives from UICT (including 200 students), the National ICT Innovation Hub, Najod Surveillance Systems, Kyambogo University, Gulu University, the Uganda Police Force, the Ministry of ICT & National Guidance, Elite Youth of Uganda, Design Hub, Mbarara Regional Referral Hospital, and various other organizations in the ICT, surveillance, marketing, supply chain, financial services, and agricultural sectors.

The lecture aimed to help a multi-stakeholder audience understand AI systems and learn how to design, develop, and deploy AI ethically. This article extends the lecture, exploring the definition of AI, its applications, associated risks, the principles of responsible AI, the application of AI in Uganda, and the next steps for Uganda. In addition, I will address the questions asked during the lecture in more detail. Let's start with a brief explanation of artificial intelligence.

What is Artificial Intelligence (AI)?

AI refers to the development of computer systems capable of performing tasks that typically require human intelligence. These tasks include visual perception, speech recognition, decision-making, and language translation. Furthermore, AI technologies built using programming languages such as Python, R, Java, JavaScript, Julia, Lisp, MATLAB, Prolog, and C++ are embedded in many tools and applications that significantly enhance our daily lives. Examples include, but are not limited to, the following.

- Virtual assistants – Siri, Alexa, and Google Assistant use natural language processing (NLP) to understand and respond to user queries, providing information, setting reminders, and controlling smart home devices.

- Recommendation systems – Netflix, Spotify, and YouTube use AI algorithms to analyze user behavior and preferences, suggesting movies, TV shows, music tracks, or videos tailored to each user.

- Search engines – Google Search uses AI to deliver relevant results, leveraging complex algorithms to rank web pages. Key factors include but are not limited to keyword relevance, page authority, user engagement, and content quality.

- Chatbots – Customer service bots on websites provide instant responses to user inquiries, improving customer support efficiency.

- Navigation apps – Google Maps, Apple Maps, and Waze use AI to provide real-time traffic updates, optimize routes, and estimate travel times.

- Text-to-speech – Speech synthesis applications convert written text into spoken words, which improves accessibility, especially for visually impaired users.

- Translation – Google Translate, DeepL, and similar tools break down language barriers by providing translations in numerous languages.

- Facial recognition – Security systems and social media platforms use facial recognition to identify individuals and tag photos automatically.

What is Generative AI?

Generative AI is a subset of AI based on machine learning models, such as large language models (LLMs), that can create new content, including text, audio, video, code, or images, in response to user prompts (the practice of crafting effective prompts is known as prompt engineering). These models are trained on vast datasets and can generate creative and contextually relevant outputs. Examples of generative AI include, but are not limited to, the following.

- ChatGPT – Using natural language processing, this AI chatbot lets users enter prompts to generate humanlike text, images, or videos for a variety of tasks.

- Google Gemini – A suite of AI tools for various applications, including natural language processing, summarization, code generation, and image generation.

- MidJourney – This platform is used for generating high-quality images based on text prompts.

- Microsoft Copilot – An AI assistant that helps people boost productivity, find information, summarize documents, and create images to enhance their lives and work experiences.

- Notion AI – This is an AI tool integrated into the Notion productivity software to assist with writing and organizing content.

- Claude.ai – This is an advanced chatbot that assists with tasks like writing, analysis, and coding.

Now that we have explored the foundational topics of AI and generative AI, let's delve further into AI systems and their constituents.

What is an AI System?

The OECD defines an AI system as follows.

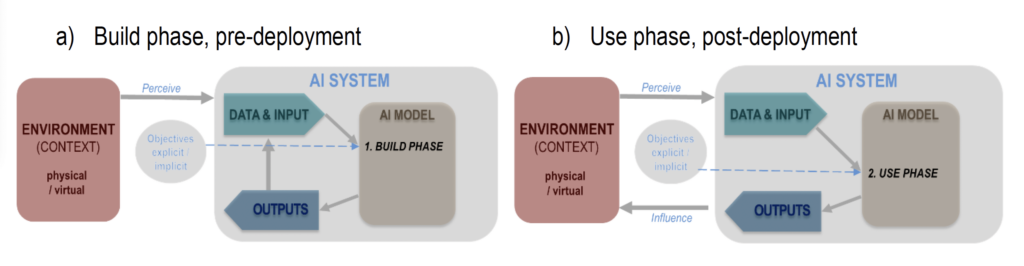

"An AI system is a machine-based system that for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment." – OECD, November 2023. Figure 1 illustrates an overview of an AI system.

Figure 1: An overview of an AI system adapted from OECD.

Note that in the context of OECD's definition of an AI system, AI systems can have both explicit and implicit objectives. Explicit objectives have been directly programmed into the system by human developers. In contrast, implicit objectives can arise from human-specified rules or through the system's ability to learn new objectives. For example, self-driving cars programmed to follow traffic rules indirectly protect lives without explicitly "knowing" this goal, which is an implicit objective.

Additional key aspects of AI systems are described below.

Inputs – Data and rules provided by humans or machines that are crucial for operating AI systems. These inputs are processed through models and algorithms to generate outputs. For example, in a visual object recognition system, inputs include the pixels of an image, which are passed through a deep neural network to classify the object in the image.

Outputs – The content generated by AI systems, including text, video, images, predictions, recommendations, or decisions.

Environment – The physical or virtual setting from which an AI system receives input and with which it interacts, providing real sensory inputs and accepting real actions from the AI system.

Moreover, under the OECD definition, AI systems are developed by humans but can adapt and set objectives independently to varying degrees. Autonomy refers to a system's ability to operate without human involvement, while adaptiveness allows AI systems to evolve their models after initial development. In this way, AI models can learn from new data and improve over time. Examples of adaptive AI include speech recognition systems that adjust to individual voices and personalized music recommender systems. This adaptability poses challenges for regulation, as it may invalidate initial performance and safety assurances, necessitating ongoing monitoring and testing throughout the system's lifecycle. For instance, AI systems can be trained once, periodically, or continually, and may develop new inference abilities not initially envisioned by their developers, some of which could be harmful.
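The need for ongoing monitoring can be made concrete with a minimal sketch. It assumes a hypothetical `RollingAccuracyMonitor` helper that compares accuracy over the most recent predictions with a baseline measured before deployment. The window size and tolerance are illustrative values, not recommendations.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent predictions (hypothetical helper)."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline              # accuracy measured before deployment
        self.tolerance = tolerance            # allowed drop before raising a flag
        self.results = deque(maxlen=window)   # True/False per recent prediction

    def record(self, predicted, actual) -> None:
        self.results.append(predicted == actual)

    def accuracy(self) -> float:
        if not self.results:
            return self.baseline  # nothing observed yet
        return sum(self.results) / len(self.results)

    def needs_review(self) -> bool:
        return self.accuracy() < self.baseline - self.tolerance


monitor = RollingAccuracyMonitor(baseline=0.90, window=10)
# Simulated post-deployment outcomes: 7 correct and 3 incorrect predictions.
for predicted, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(predicted, actual)

print(monitor.accuracy())      # 0.7
print(monitor.needs_review())  # True
```

A flag like this only signals that the original performance assurances may no longer hold. Deciding what to do next (retraining, rollback, or further testing) remains a human judgment.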

To provide a comprehensive regulatory framework for AI technologies, the EU has introduced the EU AI Act. As a forerunner in AI regulation, the EU AI Act establishes a common regulatory and legal framework for AI. Proposed by the European Commission on 21 April 2021, it was approved by the European Parliament on 13 March 2024 and unanimously approved by the EU Council on 21 May 2024. This regulatory framework aims to ensure the safe and ethical development and use of AI technologies within the EU.

The AI Act encompasses all participants in the AI value chain, including providers, deployers, importers, distributors, and product manufacturers, which are defined below.

- Provider – A provider is defined as a natural or legal person, public authority, agency, or other entity that either develops an AI system or a general-purpose AI model, or has one developed, and then places it on the market or puts it into service under its own name or trademark, regardless of whether this is done for payment or for free. Based on Article 25 and Recital 84 of the Act, other operators (such as distributors, importers, or deployers) are also treated as providers of a high-risk AI system in the following scenarios:

  - Branding: If they put their name or trademark on a high-risk AI system already available on the market or put into service.

  - Substantial Modification: If they make a significant modification to an already marketed or deployed high-risk AI system.

  - Change of Intended Purpose: If they alter the intended purpose of an AI system that was not initially classified as high-risk, resulting in its reclassification as high-risk.

- Deployer – This refers to a natural or legal person, public authority, agency, or other entity that uses an AI system under its authority, excluding instances where the AI system is used for personal, non-professional activities.

- Importer – This is defined as a natural or legal person located or established within the EU who places an AI system on the market, where the AI system bears the name or trademark of a natural or legal person established outside of the EU.

- Distributor – This is defined as a natural or legal person in the supply chain, other than the provider or importer, who makes an AI system available in the EU market.

The structured categorization of operators in the EU AI Act is relevant to Uganda for several reasons, especially considering Uganda's ambitions to exploit AI to expedite the country's economic development. Aligning with the EU AI Act can provide a framework to protect citizens from potential harms associated with AI, help establish clear responsibilities, ensure compliance with international standards, and foster innovation and trust in AI technologies within the country.

In this context, it is important to understand the specific risks associated with AI systems. The risk categories outlined in the EU AI Act, despite the Act's limitations, serve as a useful reference for guiding AI governance and assessing the risks of AI systems. An overview of these risks is presented in Figure 2 below.

Figure 2: An overview of the risks posed by AI systems as classified by the EU adapted from trail.

These risks are described with examples below.

Unacceptable Risks – AI practices in this category are prohibited. Examples are highlighted below.

- Real-time remote biometric identification – The use of AI for real-time identification of individuals in public spaces is banned, except for specific law enforcement purposes, due to privacy concerns and potential misuse.

- Emotion recognition – Banned in workplaces and educational settings, with narrow exceptions, to prevent exploitation of emotional data and ensure privacy.

- Predictive policing – AI systems that assess the risk of future crimes pose ethical and privacy concerns, as they can lead to biased outcomes and unjust profiling.

- Scraping facial images – The untargeted collection of facial images for analysis or surveillance without consent is prohibited to protect individual privacy.

- Social scoring systems – Systems that evaluate individuals based on their behavior and assign scores that affect their opportunities are banned to prevent discrimination and social inequality.

High Risks – These AI systems either function as a safety feature within a product or are themselves the product. Examples taken from Annex III of the EU AI Act and this trail article are shown below.

- Critical Infrastructure – AI applications in transport and other critical sectors are strictly regulated to prevent life-threatening failures and ensure public safety.

- Employment Management – AI systems used for recruitment and worker management must be transparent and fair to avoid biased decisions that can affect employment opportunities.

- Essential Services – AI in credit scoring and other essential services must be reliable and fair, as biased decisions can deny individuals access to necessary services like loans.

- Law Enforcement – AI tools used in law enforcement must respect fundamental rights and ensure that their evaluations are accurate and unbiased.

- Migration Management – Automated systems for processing visa applications and other migration-related tasks must be thoroughly vetted to avoid unjust decisions and ensure fairness.

Limited Risks – Applications like chatbots that interact with users must clearly inform them that they are dealing with AI, ensuring transparency.

Minimal Risks – General AI applications, such as video games or spam filters, are considered minimal risk but should still adhere to ethical principles.
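As a rough illustration of how the four tiers might be used for a first-pass triage, the sketch below maps a few of the examples mentioned above to their tier. The `RiskTier` enum, the `EXAMPLE_USE_CASES` table, and the `triage` function are hypothetical names introduced here. An actual classification requires legal analysis of the Act itself.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strictly regulated"
    LIMITED = "must disclose that users are dealing with AI"
    MINIMAL = "ethical principles still apply"


# Illustrative mapping based only on the examples in this article.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "untargeted facial image scraping": RiskTier.UNACCEPTABLE,
    "credit scoring": RiskTier.HIGH,
    "recruitment screening": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> str:
    """Look up a use case; anything unknown is sent for manual assessment."""
    tier = EXAMPLE_USE_CASES.get(use_case.lower())
    if tier is None:
        return f"{use_case}: not in the example table, needs manual assessment"
    return f"{use_case}: {tier.name} risk ({tier.value})"


print(triage("credit scoring"))
print(triage("drone delivery"))
```

Defaulting unknown use cases to manual assessment, rather than to the minimal tier, is a deliberately cautious design choice.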

Now that we have explored the risks outlined in the EU AI Act, let's explore the definition of responsible AI.

What is Responsible AI?

Responsible AI involves the design, development, and use of AI in a manner that prioritizes ethical considerations, societal impact, fairness, transparency, accountability, sustainability, privacy, and professionalism. The goal is to ensure these technologies do not cause harm and align with human values. Below are some guiding principles of responsible AI derived from existing frameworks such as Microsoft's six guiding principles of responsible AI, the Ethics Guidelines for Trustworthy Artificial Intelligence presented by the EU's High-Level Expert Group on AI, Unesco's Recommendation on the Ethics of AI, and the Center for AI and Digital Policy's Universal Guidelines for AI.

Responsible AI Guiding Principles

- Fairness

Ensure AI systems are equitable and unbiased, providing equal treatment to all individuals. This involves:

- Regularly auditing algorithms to detect and correct biases.

- Implementing diverse and representative datasets.

- Engaging with a broad range of stakeholders to identify and address potential inequities.

- Reliability and safety

Develop AI that functions consistently, handles unexpected situations safely, and resists manipulation. This includes:

- Rigorous testing under various scenarios to ensure robustness.

- Implementing fail-safe mechanisms and redundancies.

- Continuous monitoring and updates to mitigate emerging risks.

- Privacy and security

Protect personal and business data, ensuring that AI operations maintain confidentiality and security. Key practices involve:

- Applying data anonymization and encryption techniques.

- Ensuring compliance with data protection regulations.

- Implementing strict access controls and auditing mechanisms.
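One of these practices can be sketched briefly. The example below replaces a direct identifier with a keyed hash (HMAC-SHA256) from Python's standard library. Note that this is pseudonymization, which is weaker than full anonymization, because anyone holding the key can re-link records. The key, field names, and record are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Hypothetical key and record, for illustration only. In practice the key
# would be stored separately from the data, under strict access control.
key = b"example-key-do-not-use"
record = {"national_id": "CM1234567", "district": "Gulu", "age_band": "25-34"}

# Copy the record, swapping the direct identifier for its pseudonym.
safe_record = {**record, "national_id": pseudonymize(record["national_id"], key)}
print(safe_record)
```

The same identifier always maps to the same pseudonym under a given key, so records can still be joined for analysis without exposing the original value.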

- Inclusiveness

Make AI accessible and beneficial to diverse individuals, including those with disabilities, ensuring that everyone can benefit from technological advancements. This entails:

- Designing user interfaces that are accessible to people with different abilities.

- Providing training and resources to underserved communities.

- Encouraging diverse participation in AI development processes.

- Transparency and explainability

Ensure that AI decision-making processes are understandable and interpretable by both experts and users. This includes:

- Documenting the design and training processes of AI models.

- Providing clear explanations of how AI systems reach their decisions.

- Implementing user-friendly tools that allow users to query and understand AI outputs.
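To show what a clear explanation of a decision can look like, here is a minimal sketch for a toy linear scoring model, where each feature's contribution is simply its weight multiplied by its value. The weights, feature names, and applicant are hypothetical. Real systems, especially deep neural networks, typically need dedicated explanation methods.

```python
# Hypothetical integer weights for a toy linear credit-scoring model.
WEIGHTS = {"income_band": 2, "years_with_bank": 1, "missed_payments": -3}


def explain(features):
    """Return the total score and each feature's contribution to it."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    return sum(contributions.values()), contributions


applicant = {"income_band": 3, "years_with_bank": 4, "missed_payments": 2}
score, contributions = explain(applicant)

print(f"score = {score}")
# List the features with the largest effect first, in plain language.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    direction = "raised" if value > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(value)}")
```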

"The term black box has been used to describe AI systems which are opaque and difficult to interpret. 'Explainability' requires that the logic behind algorithmic decision-making can be fully interpreted by experts and that this logic can be explained to users in accessible language." – Unesco Ethics of AI

- Accountability and assessment

Hold designers and operators of AI systems responsible for their impact and functionality, ensuring thorough evaluation before deployment. This involves:

- Establishing clear lines of responsibility for AI systems' performance and outcomes.

- Conducting independent audits and impact assessments.

- Implementing mechanisms for addressing grievances and feedback from affected parties.

- Society and environmental well-being

Consider the broader social, societal, and environmental impacts of AI, promoting sustainability and minimizing negative effects. This includes:

- Evaluating the environmental footprint of AI operations and seeking energy-efficient solutions.

- Assessing the social impact of AI deployments, particularly on employment and human rights.

- Promoting AI applications that contribute to societal good, such as healthcare and education.

- Human agency and oversight

Enhance human decision-making through AI while implementing oversight mechanisms to maintain control and accountability. This entails:

- Ensuring humans remain in the loop for critical decisions.

- Designing AI systems that support and augment human capabilities rather than replace them.

- Implementing governance frameworks that provide clear guidelines for AI use and intervention protocols.
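Keeping humans in the loop can be sketched as a simple routing rule: any decision marked as critical, or any prediction the model is not confident about, is sent to a human reviewer. The `Decision` class, the `route` function, and the 0.9 threshold are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    model_suggestion: str
    confidence: float
    needs_human_review: bool


def route(prediction: str, confidence: float,
          critical: bool = False, threshold: float = 0.9) -> Decision:
    """Flag a prediction for human review if it is critical or uncertain."""
    needs_review = critical or confidence < threshold
    return Decision(prediction, confidence, needs_review)


print(route("approve loan", 0.97))                     # confident, not critical
print(route("approve loan", 0.60))                     # uncertain
print(route("reject application", 0.99, critical=True))  # always reviewed
```

In this design the model's output is kept as a suggestion even when a human takes over, which supports the goal of augmenting rather than replacing human judgment.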

Additional information on the guiding principles of responsible AI can be garnered from the following articles 1, 2, and 3. In addition, I have listed key regulatory frameworks and tools to support responsible AI development below.

Council of Europe AI Treaty

International guidelines for AI development and use, promoting ethical standards and human rights. This treaty focuses on:

- Upholding human dignity, equality, and fairness in AI applications.

- Ensuring that AI technologies respect democratic values and the rule of law.

- Establishing mechanisms for cross-border cooperation and enforcement of ethical AI practices.

Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence

- This is a directive issued by the President of the United States aimed at ensuring the development and use of AI technologies in a manner that is safe, secure, and trustworthy. It outlines guidelines and strategies for federal agencies to promote responsible AI practices, mitigate risks, and enhance public trust in AI systems. The executive order includes measures to enhance AI transparency, accountability, and fairness.

United Nations (UN) General Assembly resolution on seizing the opportunities of safe, secure and trustworthy artificial intelligence systems for sustainable development

- This resolution adopted by the UN General Assembly emphasizes the potential of AI to contribute to sustainable development goals. It calls for international cooperation to harness the benefits of AI while addressing associated risks. The resolution highlights the need for global standards and best practices to ensure that AI systems are developed and used in ways that are safe, secure, and trustworthy, contributing positively to economic and social development.

OECD AI Principles

Global principles for trustworthy AI, focusing on inclusiveness, sustainability, and respect for human rights. These principles encourage:

- The development of AI systems that are robust, secure, and safe throughout their lifecycle.

- Transparency and explainability to build public trust.

- Continuous monitoring and evaluation to mitigate risks and ensure positive societal impacts.

Unesco’s Ethics of Artificial Intelligence

Guidelines for ethical AI development, emphasizing transparency, accountability, and social impact. Unesco's framework includes:

- Ethical impact assessments to evaluate potential risks and benefits.

- Policies to ensure AI technologies are designed and implemented with consideration for human rights and ethical standards.

- Strategies to promote diversity and inclusion in AI development.

NIST AI Risk Management Framework

- Developed by the National Institute of Standards and Technology (NIST), the Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile provides a structured approach to managing risks associated with AI. Moreover, the NIST AI Risk Management Framework is designed to help organizations understand and manage the risks posed by AI technologies to individuals, organizations, and society. It includes guidelines for evaluating and mitigating risks related to AI ethics, privacy, security, and bias.

Unesco's Impact Assessment

Tools for assessing the societal impact of AI technologies. These tools help organizations:

- Measure the effects of AI on various aspects of society, including employment, privacy, and social equality.

- Identify potential negative impacts and develop mitigation strategies.

- Ensure that AI technologies contribute positively to sustainable development goals.

Responsible AI tools for TensorFlow

Open-source tools to help developers create responsible AI models. These tools provide:

- Features for bias detection and mitigation.

- Capabilities to ensure AI systems' fairness and transparency.

- Resources to integrate ethical considerations into the AI development lifecycle.

Fairlearn

A toolkit for assessing and mitigating unfairness in AI systems. Fairlearn offers:

- Methods to analyze the fairness of AI models.

- Algorithms to reduce bias and ensure equitable outcomes.

- Visualization tools to help developers understand and communicate fairness metrics.
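To give a feel for the kind of fairness metric such toolkits report, the sketch below computes selection rates per group and the demographic parity difference (the gap between the highest and lowest rate) in plain Python. Fairlearn provides a metric of the same name in `fairlearn.metrics`, but the code here is an independent illustration and not Fairlearn's implementation. The loan data is hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan approvals (1 = approved) for two applicant groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["urban", "urban", "urban", "urban", "rural", "rural", "rural", "rural"]

print(selection_rates(preds, groups))                # {'urban': 0.75, 'rural': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

A difference of 0 would mean both groups are approved at the same rate. A large gap is a prompt for investigation, not proof of discrimination on its own.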

Microsoft's responsible AI dashboard

A platform for monitoring and evaluating the ethical aspects of AI systems. This dashboard enables:

- Continuous tracking of AI systems' performance against ethical benchmarks.

- Detailed reporting on model performance, fairness, error analysis, accountability, and transparency metrics, along with data analysis to identify over- or underrepresentation.

In essence, by complying with these guiding principles we can develop AI systems that are not only technically sound but also ethical and beneficial to society, and are aligned with human values and rights. Let's now dive deeper into the risks of AI Systems to Africa, which were also discussed in my recommendations to the African Union for their Continental Strategy on AI.

Risks of AI Systems to Africa

1. Digital colonialism – This refers to a situation in which large-scale tech companies from developed nations extract, analyze, and own user data from less developed regions for profit and market influence, with minimal benefit to the data source. This modern form of colonialism parallels the historical "Scramble for Africa," where European powers divided and exploited African resources.

Moreover, many African countries lack robust data protection laws and infrastructure ownership, allowing Western tech companies to exploit data with minimal oversight. Key issues include:

- Ill-equipped data protection laws – Many African nations have underdeveloped or non-existent data protection regulations. This gap allows for the unregulated extraction and use of data.

- Infrastructure ownership by Western companies – Western tech firms often own critical infrastructure, giving them control over data flows and access.

2. Cobalt mining in the Democratic Republic of Congo (DRC) – Cobalt is a key component in lithium-ion batteries, which power mobile devices, electric vehicles, data centers, and portable AI devices, all of which run AI applications. Notably, seventy percent of the world's cobalt is produced in the DRC, where mining operations are often linked to severe human rights abuses, including child labor and unsafe working conditions. The demand for cobalt, driven by the growth of AI and other technologies, therefore raises significant ethical and human rights concerns. Additionally, cobalt mining can cause substantial environmental damage, such as habitat destruction and pollution. As such, tech companies are increasingly pressured to ensure their supply chains are free from unethical practices and to source cobalt responsibly.

3. Dependency on foreign technologies – Heavy reliance on AI technologies from the global North can lead to several risks including but not limited to the following.

- Economic inequalities – Local industries might struggle to compete, exacerbating economic disparities.

- Security concerns – Critical infrastructure and sensitive data controlled by foreign entities pose national security risks.

- Ethical and governance issues – Local regulations may lag behind, leading to misuse and lack of accountability.

- Health inequalities – AI diagnostic tools developed with datasets from the global North may not be as accurate for African populations due to genetic, environmental, and lifestyle differences. For example, skin cancer detection algorithms trained on lighter skin tones may not perform well on darker skin tones.

- Agricultural inequalities – Precision agriculture tools designed for temperate climates may not be directly applicable to African climates. Local adaptation is needed to account for varying climate conditions and soil types.

- Language barriers – Educational software often uses languages and cultural references familiar to students in the global North, which may not be relevant or easily understood by African students.

- Limited infrastructure capabilities – Many educational technologies assume access to high-speed internet and modern devices, which may not be available in rural or underserved areas in Africa.

Additional risks of AI technologies

- Surveillance – Governments and organizations can use AI to conduct extensive surveillance on populations, leading to privacy invasions and potential abuses of power, especially for marginalized groups.

- Psychological manipulation – AI can manipulate behavior through personalized advertisements and content, exploiting psychological weaknesses for profit.

- Privacy and security risks – AI systems can compromise data security and privacy, leading to potential breaches and misuse.

- Deepfakes – AI-generated deepfakes can create and spread misinformation, influencing public opinion and election outcomes.

- AI fraud – AI systems can be used for fraudulent activities, such as AI-enabled scams in financial institutions, like the Bank of Uganda.

- Bias amplification – AI systems trained on biased datasets can perpetuate and amplify existing biases, misrepresenting diverse groups in multicultural and multilingual African societies. Training data originating from the Global North may not reflect Africa’s cultural, linguistic, or systemic realities, leading to irrelevant and ineffective AI systems in African contexts.

Mitigating AI Risks in the Ugandan Context

To address the unique challenges posed by AI in Uganda, several targeted strategies can be implemented:

- Modify data protection laws – Update the Data Protection and Privacy Act, 2019, and the Data Protection and Privacy Regulations, 2021, to include provisions specific to AI. This ensures comprehensive legal coverage and protection for citizens against AI-related data misuse.

- Capacity building – Invest in training programs to build AI literacy and expertise among professionals. This will enable them to develop, deploy, and manage AI systems responsibly, fostering local innovation and reducing dependency on foreign technologies.

- Explainable AI – Promote the development and use of AI systems that provide clear, understandable explanations for their decisions. This enhances transparency and trust, making it easier for users to understand and accept AI-driven outcomes.

- Regulation of high-risk AI systems – Implement stringent regulations for high-risk AI applications to ensure they meet safety, fairness, and transparency standards. This is crucial for applications that impact critical areas such as healthcare, finance, and public safety.

- High-quality datasets – Ensure the datasets used to train AI systems are of high quality, representative, and free from biases. This minimizes discriminatory outcomes and improves the overall effectiveness and fairness of AI applications.

- Localization of datasets – Develop and use local datasets to train and fine-tune AI models, ensuring they are relevant and effective in the local context. This enhances the performance of AI systems in addressing Uganda's specific needs and challenges.

- Use synthetic data – Where it is impossible to access real data, utilize synthetic data to train AI models. Synthetic data can help overcome data scarcity issues while maintaining privacy and mitigating risks associated with using sensitive real-world data.

- Exploit open datasets – Leverage open datasets available from various repositories to enhance AI development. Examples of African repositories include the Africa Open Science Platform (AOSP), openAfrica, and Data Africa, which provide valuable datasets for research and innovation.

- Detailed documentation – Provide comprehensive documentation for AI systems, detailing their purpose, functionality, and compliance with regulatory standards. This documentation is essential for accountability and for understanding how AI systems operate.

- Human oversight – Implement appropriate human oversight measures to monitor AI systems, ensuring they operate as intended and mitigating risks. Human oversight is vital for intervening in case of unexpected behaviors or errors in AI systems.
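The synthetic data strategy above can be illustrated with a deliberately simple sketch: summarise each numeric column of a small real dataset by its mean and standard deviation, then sample new rows from those distributions. The function names and the farm data are hypothetical. Sampling columns independently ignores the relationships between them and does not by itself guarantee privacy, so this is a starting point rather than a production approach.

```python
import random
import statistics


def fit_column(values):
    """Summarise a numeric column by its mean and standard deviation."""
    return statistics.mean(values), statistics.stdev(values)


def synthesize(real_rows, n, seed=0):
    """Draw n synthetic rows, sampling each column independently."""
    rng = random.Random(seed)  # fixed seed so the output is reproducible
    columns = list(real_rows[0].keys())
    params = {c: fit_column([row[c] for row in real_rows]) for c in columns}
    return [
        {c: rng.gauss(*params[c]) for c in columns}
        for _ in range(n)
    ]


# Hypothetical seasonal records from three farms.
real = [
    {"rainfall_mm": 110.0, "yield_kg": 950.0},
    {"rainfall_mm": 95.0, "yield_kg": 870.0},
    {"rainfall_mm": 130.0, "yield_kg": 1020.0},
]
synthetic = synthesize(real, n=5)
for row in synthetic:
    print(row)
```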

Implementing these strategies will help Uganda harness the benefits of AI technology while mitigating potential risks, promoting sustainable development, and ensuring ethical and equitable use of AI. Let's now explore some examples of the sectoral impact of AI technologies in Uganda.

Sectoral impact of AI in Uganda

The integration of AI technologies across various sectors in Uganda is fostering significant advancements, enhancing efficiency, and addressing local challenges.

Health

- Digital X-ray systems – AI-equipped digital X-ray systems are playing a crucial role in enhancing tuberculosis (TB) screening in remote areas. These systems use AI algorithms to analyze X-ray images, improving diagnostic accuracy and access to healthcare services.

- Malaria diagnosis – AI systems are being used to analyze blood samples for malaria, providing accurate and timely diagnoses. These systems enhance the ability of healthcare providers to detect malaria cases early and administer treatment promptly.

Agriculture

- AI-Powered Farmshield – Tools like Synnefa's Farmshield use AI and drones to collect and analyze data on soil conditions, weather patterns, and crop growth. This information enables farmers to make informed decisions about planting, irrigation, and fertilization, ultimately improving crop yields and sustainability.

Education

- Muele Makerere University, Hadithi Hadithi!, Checheza – AI applications in education are transforming learning experiences by providing personalized content, interactive learning platforms, and adaptive assessments. These tools cater to individual learning needs, enhancing educational outcomes.

Banking

- Centenary Bank – The bank has invested $14 million in a core banking system that uses AI to predict customer needs and recommend products based on their financial journey. This investment is aimed at enhancing customer satisfaction and operational efficiency.

- FINCA Uganda – In partnership with Proto and Eclectics, FINCA Uganda has deployed an AI Customer Experience solution featuring the chatbot Flora. This solution automates customer service tasks across multiple digital platforms, improving efficiency and customer engagement.

From the aforementioned examples, Uganda is advancing across various sectors through AI, driving innovation, and enhancing the quality of life for its citizens. Let's now dive into how Uganda can build capacity through a multi-stakeholder approach.

Building AI Capacities in Uganda's AI Ecosystem

Attracting foreign direct investment

To attract foreign direct investment in AI, Uganda should focus on the following.

- Fair regulation and demonstrable AI ethics – Implementing fair regulations and demonstrating a commitment to AI ethics.

- Effective data protection laws – Establishing strong data protection laws that ensure high levels of data security, thus building investor confidence.

Harnessing the private and public sectors

The private sector plays a crucial role in advancing responsible AI innovation and scaling up solutions. Key actions include:

- Responsible innovation – Encouraging private companies to develop and implement responsible AI solutions.

- Public procurement requirements – Enforcing transparency and accountability in public procurement processes to ensure ethical AI practices.

- Technology transfer agreements – Ensuring technology transfer agreements uphold local needs and values, focusing on skills development, job creation, social impact assessments, fair labor practices, and the involvement of trade unions.

Building AI literacy in Uganda's education system

To prepare Uganda's future workforce for the digital age, it is essential to foster AI literacy among both teachers and students. Key initiatives may include the following.

- Early education in coding, robotics, and computing – Introducing coding, robotics, and basic computing skills at an early age to build a strong foundation in technology.

Building AI capacities in higher education

Curriculum development

Expanding and updating higher education curricula to include AI-related courses is essential. This may include the following.

- Interdisciplinary approach – Incorporating AI courses across various disciplines, including engineering, computer science, humanities, social sciences, and medical fields. This holistic approach ensures that students from diverse backgrounds can gain an understanding of AI and its applications.

Faculty training

Empowering educators through comprehensive training programs is critical to teaching AI effectively.

- Training programs – Implementing training programs that equip faculty with the skills and knowledge to leverage AI technologies responsibly. This enables them to guide students in understanding and applying AI in various contexts.

Funding and research

Increasing funding for AI research fosters innovation and responsible applications through the following.

- Research funding – Allocating more funds to AI research to drive innovation and explore practical applications.

- Clear data policies – Establishing clear data policies in research institutions to ensure data quality and integrity, which are essential for credible and impactful research.

Supporting start-ups and innovation hubs

Innovation hubs and incubators within universities can encourage the development of AI solutions and start-ups.

- Innovation hubs and incubators – Creating and supporting spaces where students and researchers can develop AI technologies and start-ups. This initiative bridges the gap between theoretical knowledge and practical application, fostering a culture of innovation and entrepreneurship.

Focusing on these areas will enable Uganda to build robust AI capacities across its education system, private sector, and public sector, ensuring that individuals are well-equipped to navigate and contribute to the rapidly evolving field of artificial intelligence.

In summary, responsible AI emphasizes inclusivity, ethical decision-making, privacy, data protection, fairness, transparency, and accountability. These principles are crucial for fostering user trust, avoiding legal issues, promoting equity, and enhancing an organization's reputation. While responsible AI faces challenges such as addressing bias, privacy concerns, and complex regulations, it is essential for upholding human values and ensuring technology is used responsibly, especially in the Global South.

In Uganda, the integration of AI technologies presents both opportunities and risks across various sectors, including health, agriculture, education, and banking. As such, building AI capacities in the education system, marginalized communities, and both the public and private sectors can help Uganda cultivate a generation equipped to leverage AI responsibly. Early education in coding, robotics, and computing, coupled with robust technology transfer agreements, can align technological advancements with local needs and values.

Attracting foreign direct investment through fair regulations, demonstrable AI ethics, and effective data protection regimes is vital for economic growth. Moreover, regulatory frameworks such as the EU AI Act, the Council of Europe AI Treaty, the OECD AI Principles, and UNESCO's Recommendation on the Ethics of Artificial Intelligence provide valuable guidelines that Uganda can adapt to ensure comprehensive legal coverage and protection for its citizens.

Despite the challenges, Uganda's commitment to responsible AI is imperative. Addressing bias and privacy concerns and navigating complex regulations will require continuous effort and collaboration through a multi-stakeholder approach. Ultimately, the use of AI in Uganda must prioritize human rights, equity, and ethical standards to ensure it benefits all segments of society.

The ultimate goal is for Uganda to harness the potential of AI to drive sustainable development, improve quality of life, and contribute to the global AI ecosystem. The time for action is now, and with a concerted effort, Uganda can emerge as a leader in responsible AI adoption and innovation.

Written by: